We’re back again this week with another set of canvassed opinions from across the worlds of comics on the effects of Generative AI on the form. Here’s our standard intro for the series…

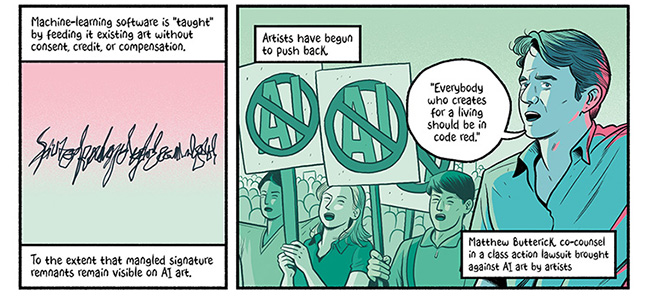

AI “art” is already encroaching on our comics spaces. There have been allegations of its use on well-known mainstream properties; popular digital platforms host AI comics regardless; AI art comics-making apps are prevalent; arts institutions that should know better have offered generative AI comics-making courses; and, perhaps most disappointingly, established professionals have experimented with it or published work using it. It remains an existential threat to creativity and to our comics community.

And supporting community is, of course, a large part of what Broken Frontier is about. So, with that in mind, some weeks back we put out a call on social media for contributions to a potential series of articles discussing the subject. To repeat our stance from that initial shout-out, we’re not here to create “balance” on this issue. We’ve made it very clear where we stand at Broken Frontier on generative AI. This is about creating a record for posterity of the thoughts of those in our scene experiencing, or concerned about, the negative impact of the theft machine on their livelihoods, practice and the future of the form/industry in general.

Art by Craig Yoe

If you would like to contribute please refer to that guidelines article here. We would particularly like to hear from more actual comics artists to make this a broader and more relevant series! And don’t be concerned if your comments aren’t in any particular entry in the series. We’re splitting things up into manageable reading chunks week by week. So here are some more thoughts on: What would you say to those advocating for the use of generative AI in the medium? And what are your wider concerns about its use in socio-political, educational or environmental terms?

Read all parts in the series in one place here

Joey Esposito, writer The Pedestrian (Magma Comix): As far as I’m concerned, if you advocate for the use of generative AI in any capacity, but especially in art, you are either a fascist, a moron, or an unabashed hack. Probably all three. Setting aside the fact that the tech goons touting it are lying through their teeth. Setting aside the fact that it doesn’t actually do what they claim it can do, or “will” do. Setting aside the fact that it is a scheme of the highest order, just like NFTs and cryptocurrency before it. Setting all of the BS hype and speculation aside, Generative AI is fundamentally a fascist tool meant to devalue human thought and expression. It is being used to stifle curiosity, obscure truth and fact, seed distrust in science, and homogenize art to the point of toothless, vacuous “content.”

All of this doesn’t even touch on the fact that it is actively destroying the planet. The only one we’ve got. There is no wiggle room or nuance. It is a tool promoted by evil people, used for an evil endgame. And if you call yourself an artist but still support this nonsense? You’re a traitor to the medium and to humanity itself. To quote Batman from The Dark Knight Returns, “This is the weapon of the enemy. We do not need it. We will not use it.”

Art by Dan Membiela

Véronique Emma Houxbois, cartoonist Transcription, Krakoa is Burning: I don’t know that I have much to say to anyone using or advocating for it. Persuasion isn’t really the game I play when I write these days. I tend to think more in terms of creating a self-selection mechanism, of seeking clarity and setting the stakes on an issue to say, you know, if this is your position, these are the outcomes you’re accepting. As an example, if Dan Panosian is comfortable putting out work as clearly compromised as his cover for Catwoman #73, then I don’t know what else there is to say to him about it.

On the environmental front, I think it really comes down to either you care or you don’t. Either you already had enough concern over climate change and so on to opt out of this stuff or you didn’t; I don’t know that you can effectively convert people that way. I think pressing that case goes the same way that it did for crypto. People were saying, you’re consuming this or that amount of energy to calculate this blockchain nonsense, and the response was, well, we’re just doing it until this other model becomes viable.

You can never dissuade people from that mentality and say, well, then why are you using this at scale now and perpetuating the current level of harm now? What’s so urgent that it cannot wait until a more efficient model can be deployed at scale? It’s FOMO, the imagined prestige of being an early adopter, perceived profit, and so on. There is some promise shown by the recent Chinese LLM built on open source software that this kind of thing can be done at a fraction of the compute power consumed by OpenAI and other American incumbents, but that isn’t the here and now where the damage is being done in real time.

So like, I’m sensitive to the environmental issue and it’s one of many reasons I don’t use this stuff for anything whatsoever, but I don’t know how to make a persuasive case for something people should already be concerned about and have access to the science on.

What I can speak to is the social and political side of all this. Which, from my view, weirdly coincides with most of what I had to say about the fracas over a demonstrably racist comic strip by Alex Graham. In that instance I had said that the choices she had made in the style she drew the comic in, the tone of it, and the company she keeps all conspired to completely separate her from her work. I didn’t have to see it to get it. There was an entire nexus of gestures and meanings that she had accessed in creating it that were overdetermined in the public imagination to the point that she was ultimately irrelevant.

And generative AI is a blunter, more obvious manifestation of this because, you know, these image generators like Midjourney or whatever are plagiarism machines. You’re always accessing an approximation of someone else’s work. I wrote about the supposed Ghibli filter at the time and that thing, like, it just looks like shit. You can’t name a single film that came out of that studio that looks like that, that has grainy Zack Snyder sepia colors, to say nothing of the crummy cartooning. It just floats along on a collective lie that people agree to in order to feel like they got something out of it.

What struck me at the time was that people on Instagram were sending prompts of their favourite wrestlers to them, hoping to get shared to the wrestler’s story. The relationship between a wrestler and their fanbase is a pretty sacred thing, in my opinion. Wrestlers are in the business for the fans in a very direct way; they exist to appeal to the viewers to advance their careers, and so there is a kind of exchange there between a wrestler appreciating the energy that their fans put into their signs and fan art and so on and the fan feeling seen by their hero. In that sense, John Cena is probably the purest professional wrestler to ever exist due to his tireless, record-breaking work for the Make-A-Wish Foundation.

But generative AI is authorless, indistinguishable from any other prompt. You can’t hang out at a venue hoping to see Tiffany Stratton or Iyo Shirai or, God forbid, Cody Rhodes, and expect them to recognize you as the person who sent them the Ghibli filter prompt on Instagram. You could if you had drawn it in all of your own idiosyncratic glory or had handmade a sign to wave at the venue, but you de-person yourself when you’re using generative AI. You’ve made yourself anonymous and completely interchangeable with any other user of the platform. There’s something gothic and depressing about that for me when it comes to fan art and things of that nature, but it gets a lot more sinister when you think about who it is that would seek out the depersonalization of their work by using generative AI.

A social media analytics firm can tell us that the infamous Taylor Swift images that circulated online during last year’s Super Bowl seem to have originated on 4chan and no further. Multiple outlets have said they came out of a game on the message board of trying to find ways to get past the guardrails on generative AI services meant to block the creation of that kind of explicit image, but there’s no definitive attribution, no author. And that’s a compelling thread to pull on because this is the kind of thing with a social cost attached to it. You generally pay a social cost for posting racist, misogynist, or otherwise bigoted or exploitative content online. But that only happens when an attribution can be made.

So it’s no accident that generative AI is being employed to disseminate bigoted and inflammatory content in line with the agendas of public figures like President Trump or Elon Musk. Producing this work anonymously in the hopes of laundering it up through the culture to high visibility public figures has been a goal of the online extreme right for quite some time. Bellingcat contributor and podcaster Robert Evans has done a great deal of compelling work analyzing how the extreme right operates online, and the utility of generative AI to their immediate goals is startlingly clear.

As one example, Evans outlined on his podcast Behind the Bastards how far right agitators were disseminating a set of clown memes to signal to each other and to hopefully acquaint people with their imagery as a tactic to lure in and recruit new blood. The clown memes mostly looked like a variant on Matt Furie’s Pepe the Frog but the “honk honk” messaging with them was intended to be read as a covert “Heil Hitler” in line with other, older coded language like “88.”

In the same episode, Evans pointed out darkly that “Nazis read Adbusters now,” as part of an observation about how they’ve come to use memes as an ideological vehicle along similar lines to how left wing counter cultures have previously used tactics like culture jamming. So, you know, whether people have really reckoned with it yet or not, we’re living in a world where Nazis have been given a tool they can use to disseminate their views at scale while largely avoiding having to pay the social cost of having their messaging attributed to them directly.

If you step back and look at this aspect of generative AI alongside how it’s being used to exert downward pressure on cultural workers and journalists, to automate their work away from them in an effort to drive down wages and circumvent organized labor, a larger picture emerges of how the technology is being deployed by major American firms like OpenAI and its network of investors, such as Microsoft.

I’m sure the question of whether or not artificial intelligence is somehow congenitally fascist is a highly debatable one, but I’m not all that interested in whether large language models developed in societies with different class structures or incentives could be utilized towards more positive ends. What I’m interested in is observing how the field is developing in the here and now: how so-called artificial intelligence has been crafted in service to power and how it’s been set up to advance the aims of fascist power structures and ideological goals.

From ‘I’m a Luddite’ by Tom Humberstone, available to read here at BF

H.L. Roberts (Sticker Knight): I think those advocating for the use of generative AI, such as the UK Government, are the problem. The more people use generative AI, the more normalised it’s going to become in society. We’ve seen from sources that using AI leaves a large carbon footprint, which isn’t good for the planet; people are generating harmful and illegal content; and people are generating propaganda via art and video to fuel war, hate, racism, etc., which others believe because AI has become more advanced than ever before.

A personal nitpick of mine about AI is the government’s push of AI on the education system, which is infuriating on another level, because kids don’t learn from being sat down at a computer reading off a screen; they learn, to an extent, through experience and being taught verbally by teachers who have that knowledge. In short, our governments need to crack down on the companies making these AIs, such as Sora, ChatGPT, Grok, Veo 3, etc., because AI holds such power for abuse and destruction.